
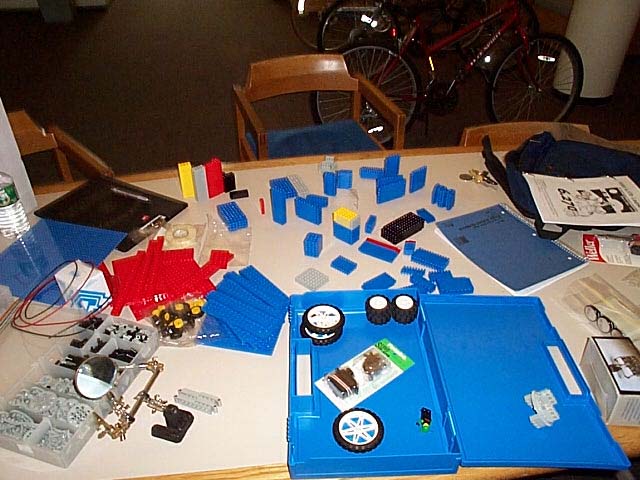

In one month, we went from a mountain of gears, axles, sensors, and wheels to a robot that could recognize the start of a 60-second competition round, orient itself using light sources, and move blocks around the competition table.


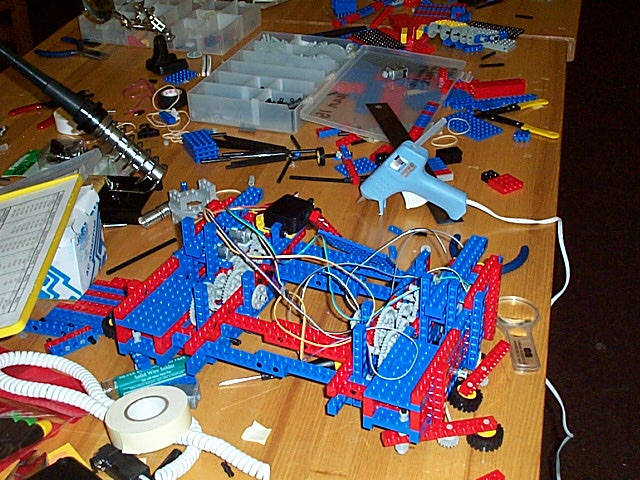

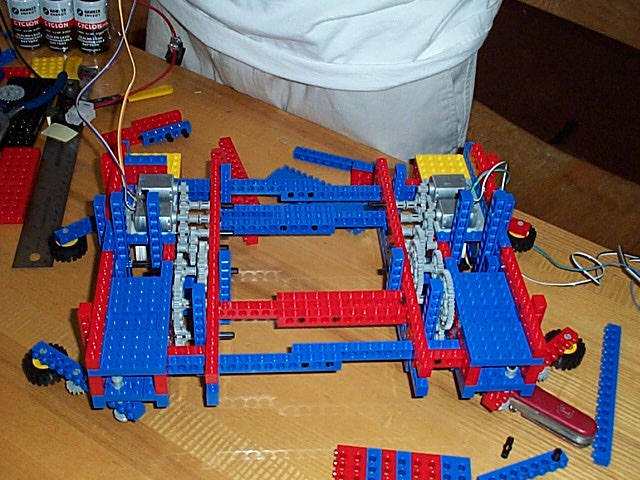

Initially, we had a wall system that could be raised and lowered. We started building a massive structure capable of moving our walls and sturdy enough to withstand the vertical motion. However, this proved inefficient, so we built a second chassis (left) whose entire middle section was a passage, or cage, for the blocks we wanted to move.


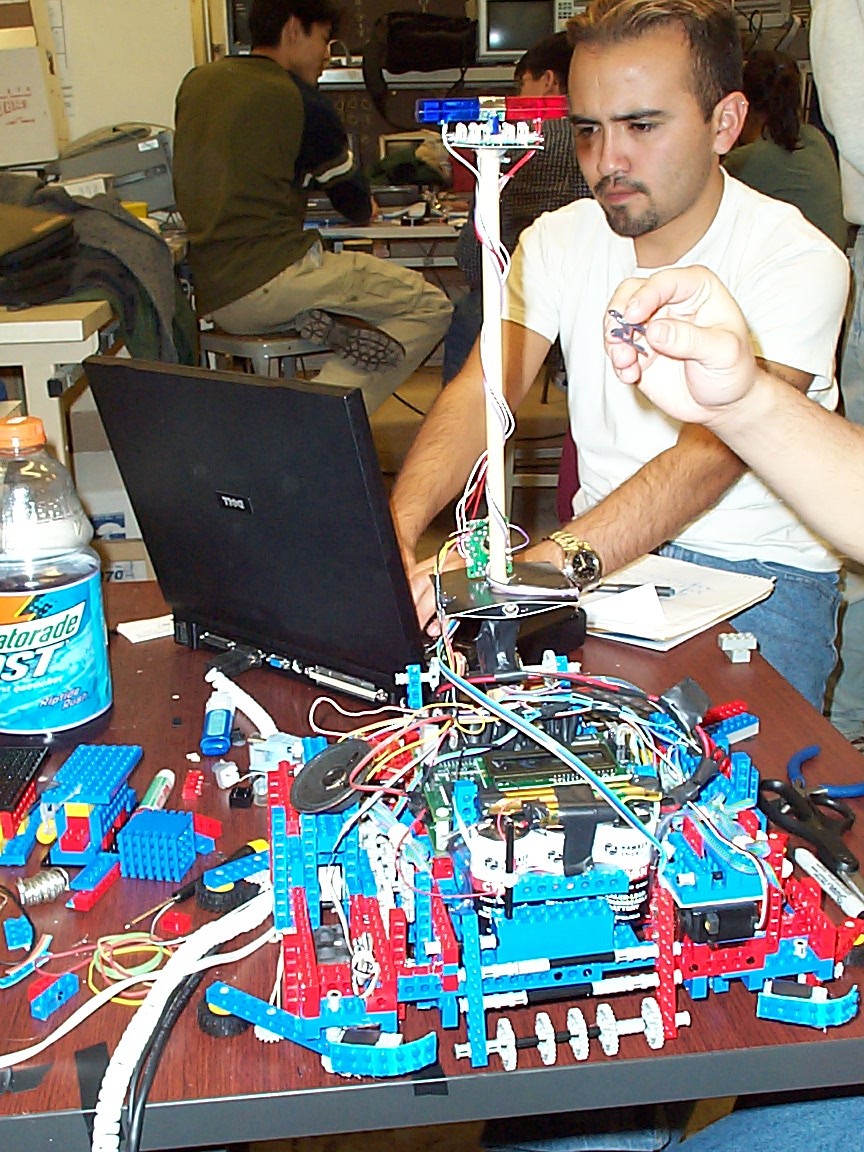

After finishing the Lego chassis, we added four bump sensors, two in the front and two in the rear. These would tell us when we had reached a wall and needed to turn. We also added two phototransistor sensors that, paired with powerful LEDs, let us determine whether the car was moving over a dark or light area of the table. Finally, we mounted four CdS (cadmium sulfide) cells on our dowel so that the robot could orient itself at the start of each round. These cells are calibrated at the beginning of the competition by reading the light intensity from four different directions. Once a round starts, the robot, now facing a random direction, takes new readings from each of the four sensors and compares them with the saved calibration readings to determine which way it is facing.
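
The write-up does not say exactly how the new readings are compared with the calibration. One plausible approach is sketched below in Java, under two assumptions that are not in the original: the four CdS cells point 90° apart, and the random starting heading is one of four quarter turns away from the calibration heading. Turning the robot by a quarter turn then cyclically shifts which cell sees which light source, so the sketch tries all four shifts and keeps the one whose readings are closest (smallest sum of squared differences) to the calibration. The class name, method names, and sample readings are hypothetical.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch: estimates the robot's heading from four light sensors
 * (assumed mounted 90 degrees apart) by matching against calibration readings.
 */
final class OrientationMatcher {

    private final int[] calibration; // readings taken in the known reference heading

    OrientationMatcher(int[] calibration) {
        if (calibration.length != 4) {
            throw new IllegalArgumentException("expected four sensor readings");
        }
        this.calibration = Arrays.copyOf(calibration, 4);
    }

    /**
     * Returns how many quarter turns (0-3) the robot appears to be rotated from
     * the calibration heading. After k quarter turns, sensor i is assumed to see
     * what sensor (i + k) mod 4 saw during calibration, so the shift with the
     * smallest sum of squared differences wins.
     */
    int quarterTurns(int[] current) {
        if (current.length != 4) {
            throw new IllegalArgumentException("expected four sensor readings");
        }
        int best = 0;
        long bestError = Long.MAX_VALUE;
        for (int k = 0; k < 4; k++) {
            long error = 0;
            for (int i = 0; i < 4; i++) {
                long d = current[i] - calibration[(i + k) % 4];
                error += d * d;
            }
            if (error < bestError) {
                bestError = error;
                best = k;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical calibration: a bright source roughly in front of sensor 0.
        OrientationMatcher matcher = new OrientationMatcher(new int[] {900, 300, 120, 310});
        // Noisy readings after the robot has been rotated by one quarter turn.
        int turns = matcher.quarterTurns(new int[] {290, 130, 320, 880});
        System.out.println("quarter turns: " + turns); // prints "quarter turns: 1"
    }
}
```

Matching the whole four-reading pattern, rather than only the brightest cell, tends to make the estimate less sensitive to a single noisy sensor. If the starting heading could be arbitrary rather than a multiple of 90°, this approach would only report the nearest quarter turn.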


All the logic the robot needs to orient itself and act accordingly is written in Java and downloaded onto a motherboard built by Compaq that runs at 200 MHz. All the motors and sensors are connected to this motherboard (left), which uses their data to run tests and behave "intelligently."
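
The write-up does not include the robot's Java code. As a rough illustration of the sense-act loop described above, the sketch below polls the bump sensors until a 60-second round expires and then stops the motors. The `Robot` interface, its `drive(left, right)` power range, the 50 ms polling period, and the specific motor powers are all hypothetical, since the board's actual API is not described. To keep the sketch runnable instantly, it counts simulated ticks instead of reading a clock; on real hardware, each iteration would wait `TICK_MILLIS` (for example with `Thread.sleep`).

```java
/** Hypothetical hardware interface; the real board's Java API is not described in the write-up. */
interface Robot {
    boolean frontBumped();                     // either front bump sensor pressed
    boolean rearBumped();                      // either rear bump sensor pressed
    void drive(int leftPower, int rightPower); // assumed power range -100..100
}

final class RoundLoop {
    static final long ROUND_MILLIS = 60_000; // a competition round lasts 60 seconds
    static final long TICK_MILLIS = 50;      // assumed polling period

    private RoundLoop() {}

    /**
     * Runs one round and returns how many ticks were spent turning away from a wall.
     * Time is simulated by counting ticks; real code would sleep TICK_MILLIS per tick.
     */
    static int run(Robot robot) {
        int turnTicks = 0;
        for (long t = 0; t < ROUND_MILLIS; t += TICK_MILLIS) {
            if (robot.frontBumped()) {
                robot.drive(-60, 60);  // wall ahead: spin in place
                turnTicks++;
            } else if (robot.rearBumped()) {
                robot.drive(60, 60);   // backed into something: pull forward
            } else {
                robot.drive(80, 80);   // nothing touched: keep driving forward
            }
        }
        robot.drive(0, 0);             // round over: stop the motors
        return turnTicks;
    }
}
```

Keeping the hardware behind an interface like this lets the same decision logic be exercised with a fake robot on a desktop before it is downloaded to the board.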
